In the Matter of Zoom (Federal Trade Commission 2023)

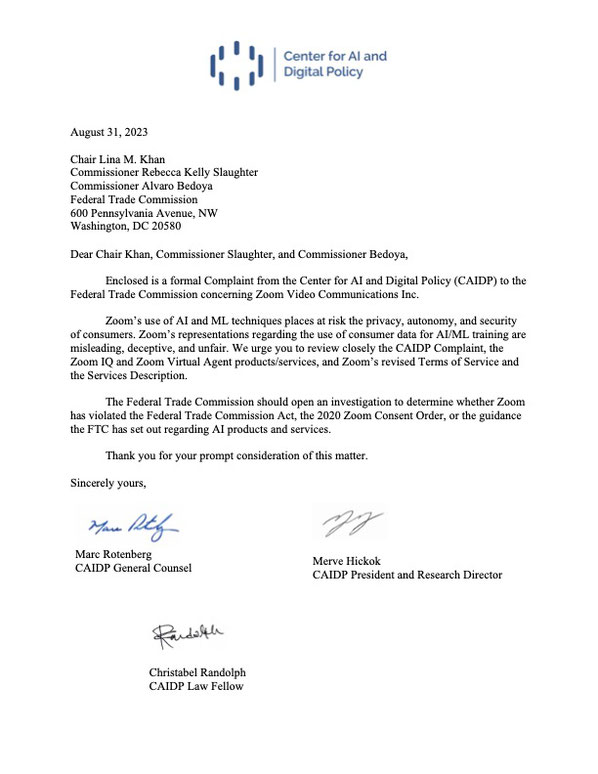

Zoom’s use of AI and ML techniques places at risk the privacy, autonomy, and security of consumers. Zoom’s representations regarding the use of consumer data for AI/ML training are misleading, deceptive, and unfair. The Federal Trade Commission should open an investigation to determine whether Zoom has violated the Federal Trade Commission Act, the 2020 Zoom Consent Order, or the guidance the FTC has set out regarding AI products and services.

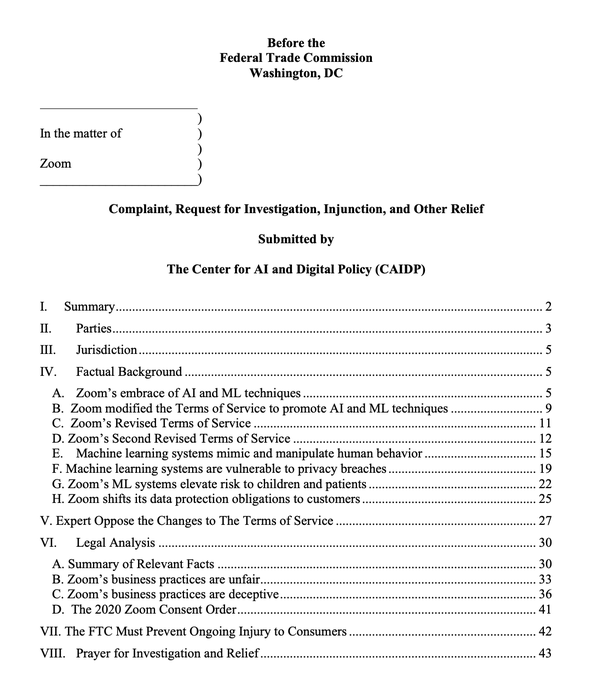

BREAKING - CAIDP Files FTC complaint about Zoom's AI-based services (Aug. 31, 2023)

- CAIDP has sent a 45-page complaint to the Federal Trade Commission, alleging that Zoom has violated the FTC Act, the 2020 Consent Order, and the business guidance that the FTC has set out for AI products and services.

- CAIDP's concern focuses on the collection of consumer data and consumer behavior for Machine Learning (ML). CAIDP alleges that Zoom has improperly collected consumer data and that its ML services violate an earlier consent order with the FTC.

- CAIDP warned that ML techniques "mimic and manipulate human behavior."

- CAIDP also warned that ML systems are vulnerable to data breaches.

- CAIDP has urged the FTC to open an investigation and determine whether Zoom has violated the FTC Act, the 2020 Zoom Consent Order, or the guidance the FTC has set out regarding AI products and services.

CAIDP Zoom Complaint (FTC, Aug. 31, 2023)

CAIDP Letter to FTC (Aug. 31, 2023)

CAIDP Press Release (Sept. 1, 2023)

The FTC and AI Policy

Over the last several years, the FTC has issued a number of reports and policy guidelines concerning the marketing and advertising of AI-related products and services. We believe that the FTC should require Zoom to comply with these guidelines.

FTC Keep your AI claims in check (2023)

When you talk about AI in your advertising, the FTC may be wondering, among other things:

- Are you exaggerating what your AI product can do?

- Are you promising that your AI product does something better than a non-AI product?

- Are you aware of the risks?

- Does the product actually use AI at all?

Aiming for truth, fairness, and equity in your company’s use of AI (2021)

In 2021, the FTC warned that research on Artificial Intelligence "has highlighted how apparently “neutral” technology can produce troubling outcomes – including discrimination by race or other legally protected classes." The FTC explained that it has decades of experience enforcing three laws important to developers and users of AI:

- Section 5 of the FTC Act

- Fair Credit Reporting Act

- Equal Credit Opportunity Act

The FTC said its recent work on AI – coupled with FTC enforcement actions – offers important lessons on using AI truthfully, fairly, and equitably.

- Start with the right foundation

- Watch out for discriminatory outcomes

- Embrace transparency and independence

- Don’t exaggerate what your algorithm can do or whether it can deliver fair or unbiased results

- Tell the truth about how you use data

- Do more good than harm

- Hold yourself accountable – or be ready for the FTC to do it for you

Using Artificial Intelligence and Algorithms (2020)

" . . . we at the FTC have long experience dealing with the challenges presented by the use of data and algorithms to make decisions about consumers. Over the years, the FTC has brought many cases alleging violations of the laws we enforce involving AI and automated decision-making, and have investigated numerous companies in this space.

"The FTC’s law enforcement actions, studies, and guidance emphasize that the use of AI tools should be transparent, explainable, fair, and empirically sound, while fostering accountability. We believe that our experience, as well as existing laws, can offer important lessons about how companies can manage the consumer protection risks of AI and algorithms."

- Be transparent.

  - Don’t deceive consumers about how you use automated tools

  - Be transparent when collecting sensitive data

  - If you make automated decisions based on information from a third-party vendor, you may be required to provide the consumer with an “adverse action” notice

- Explain your decision to the consumer.

  - If you deny consumers something of value based on algorithmic decision-making, explain why

  - If you use algorithms to assign risk scores to consumers, also disclose the key factors that affected the score, rank ordered for importance

  - If you might change the terms of a deal based on automated tools, make sure to tell consumers.

- Ensure that your decisions are fair.

  - Don’t discriminate based on protected classes.

  - Focus on inputs, but also on outcomes

  - Give consumers access and an opportunity to correct information used to make decisions about them

- Ensure that your data and models are robust and empirically sound.

- Hold yourself accountable for compliance, ethics, fairness, and nondiscrimination.

FTC Report to Congress (2022)

FTC Report Warns About Using Artificial Intelligence to Combat Online Problems

Agency Concerned with AI Harms Such As Inaccuracy, Bias, Discrimination, and Commercial Surveillance Creep (June 16, 2022)

Today the Federal Trade Commission issued a report to Congress warning about using artificial intelligence (AI) to combat online problems and urging policymakers to exercise “great caution” about relying on it as a policy solution. The use of AI, particularly by big tech platforms and other companies, comes with limitations and problems of its own. The report outlines significant concerns that AI tools can be inaccurate, biased, and discriminatory by design and incentivize relying on increasingly invasive forms of commercial surveillance.

FTC Judgements Concerning AI Practices

On March 4, 2022, the Federal Trade Commission announced the settlement of a case against a company that had improperly collected children's data. Notably, the FTC required the company to destroy the data and any algorithms derived from the data.

On May 7, 2021, the Federal Trade Commission announced the settlement of a case with the developer of a photo app that allegedly deceived consumers about its use of facial recognition technology and its retention of the photos and videos of users who deactivated their accounts. Notably, the FTC required the company to delete the photos and videos of users who deactivated their accounts, along with the models and algorithms the company developed.

- FTC Finalizes Settlement with Photo App Developer Related to Misuse of Facial Recognition Technology (May 7, 2021)

News Reports

CAIDP Resources

Testimony and Statement for the Record, Merve Hickok, CAIDP Chair and Research Director

Advances in AI: Are We Ready For a Tech Revolution?

House Committee on Oversight and Accountability, March 6, 2023

Marc Rotenberg and Merve Hickok, Artificial Intelligence and Democratic Values: Next Steps for the United States

Council on Foreign Relations, August 22, 2023

Merve Hickok and Marc Rotenberg, The State of AI Policy: The Democratic Values Perspective

Turkish Policy Quarterly, March 4, 2022

Marc Rotenberg and Sunny Seon Kang, The Use of Algorithmic Decision Tools, Artificial Intelligence, and Predictive Analytics

Federal Trade Commission, August 20, 2018

Marc Rotenberg, In the Matter of HireVue, Complaint and Request for Investigation, Injunction, and Other Relief

Federal Trade Commission, November 6, 2019

Marc Rotenberg, In the Matter of Universal Tennis, Complaint and Request for Investigation, Injunction, and Other Relief

Federal Trade Commission, May 17, 2017